
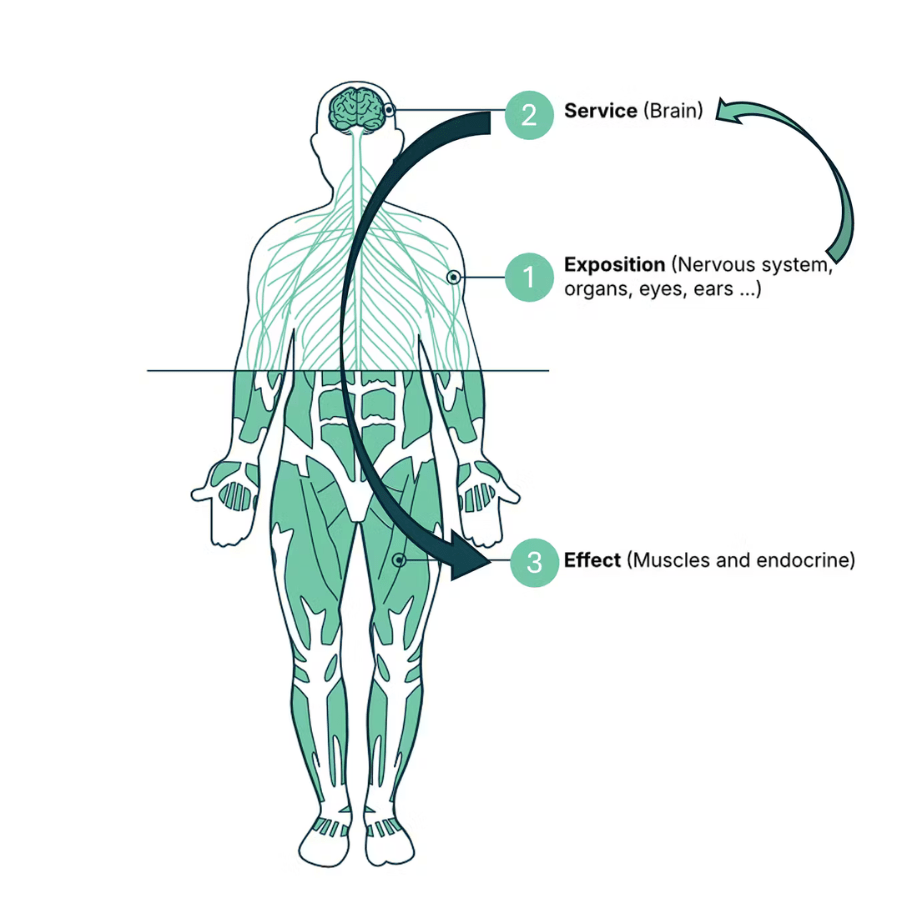

In previous articles, I’ve hinted at our ESE architecture and how it differs from the traditional MVC setup. Now it’s time to dig in and explore what makes ESE both distinctive and refreshingly simple. Its structure is inspired by the way the human body works.

The Human Body Analogy

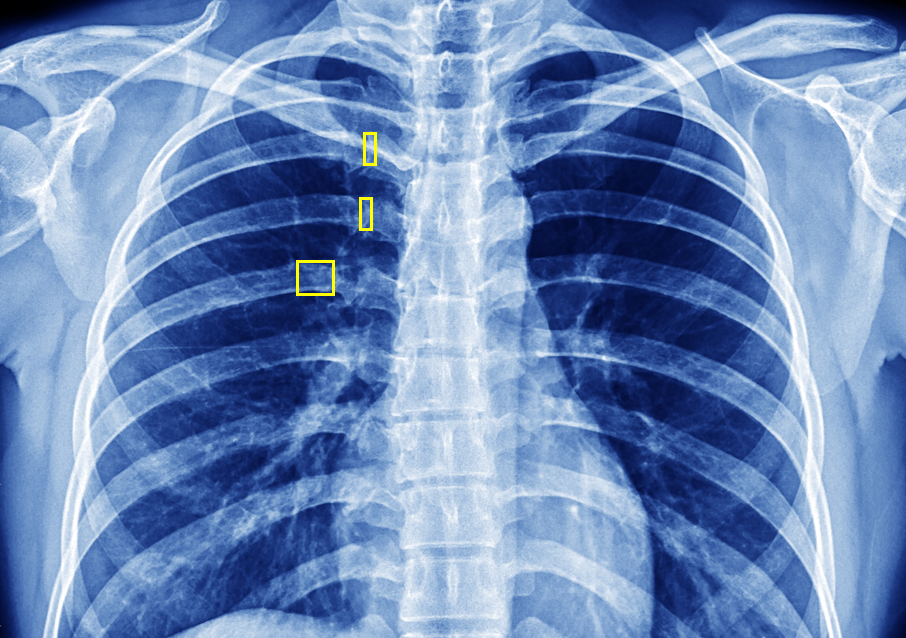

Let’s take a break from talking about software and think about the human body for a moment.

- Exposition: This layer is like our sensory organs (eyes, ears, skin) and the nervous system. It gathers information from the outside world and sends it to the brain for processing. In the same way, the Exposition layer channels external stimuli into the system.

- Service: The brain! It processes the information received, makes decisions, and determines what actions must be taken. Similarly, the Service layer is the core of the system, where the business logic resides.

- Effect: Think of this layer as the muscles and the endocrine system. When the brain decides to move a hand or release a hormone, these systems carry out the action. In the ESE architecture, the Effect layer executes the outcomes decided by the Service layer and dispatches events to keep the entire system (the “world”) informed. We strive to keep these actions atomic and, as much as possible, free of conditional logic.

Understanding ESE: Exposition, Service, Effect

Now, back to the world of computers. At its core, ESE (Exposition-Service-Effect) is a backend architecture that emphasizes a clean separation of concerns, making it easier to manage, test, and scale the different parts of a system. But what exactly do these layers do?

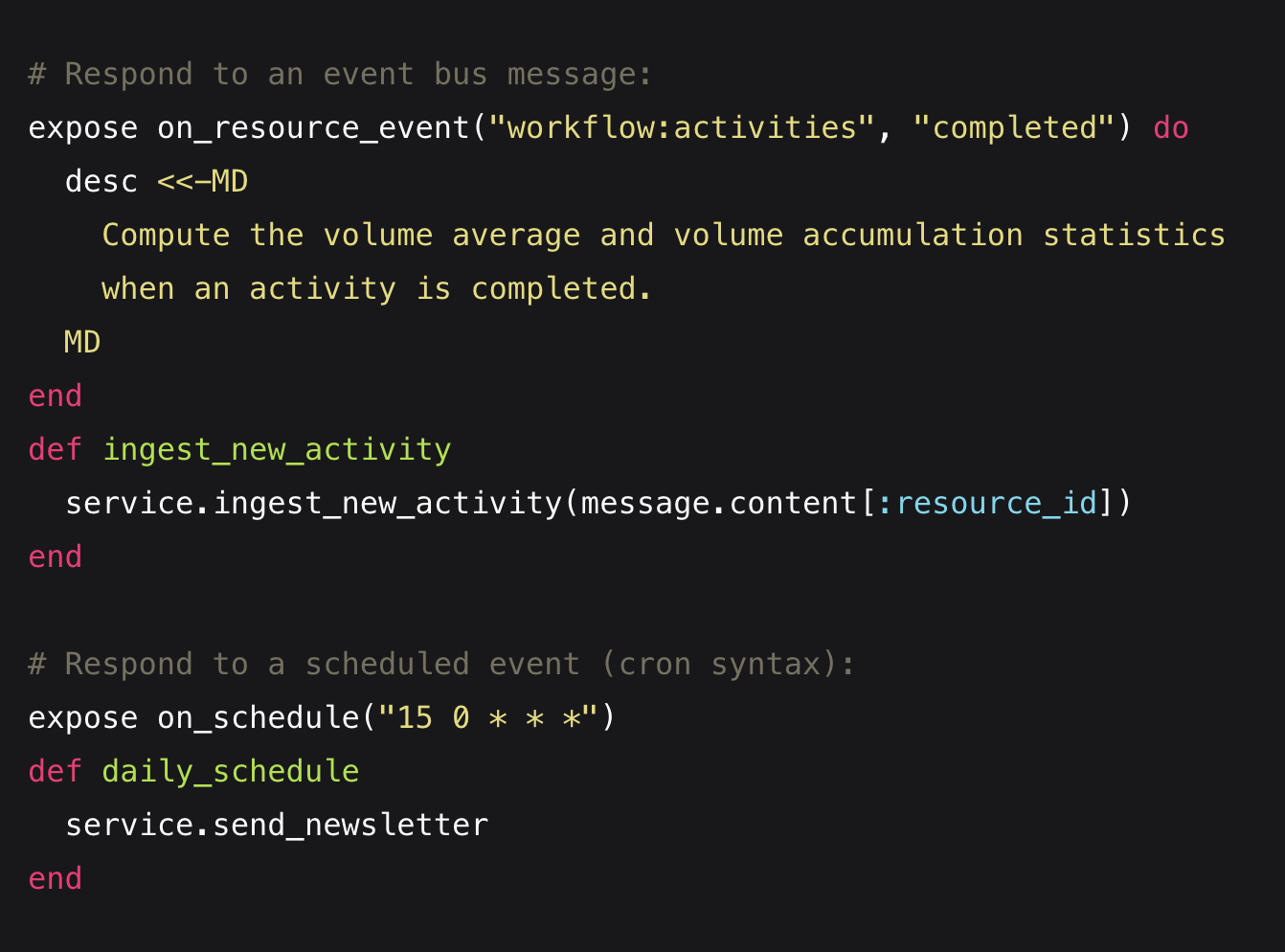

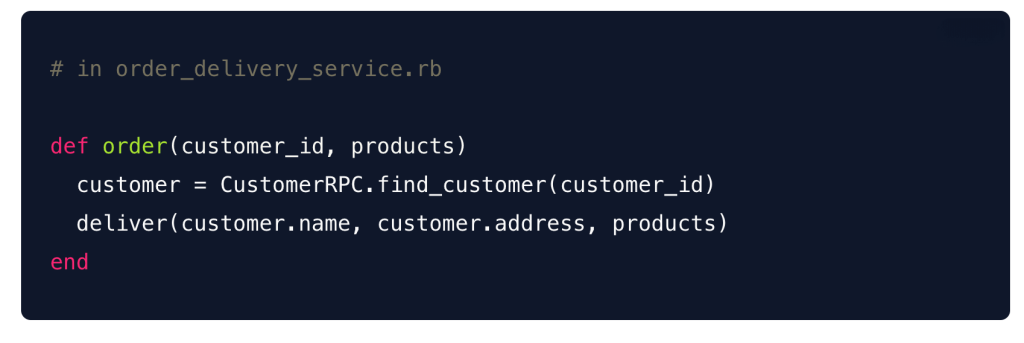

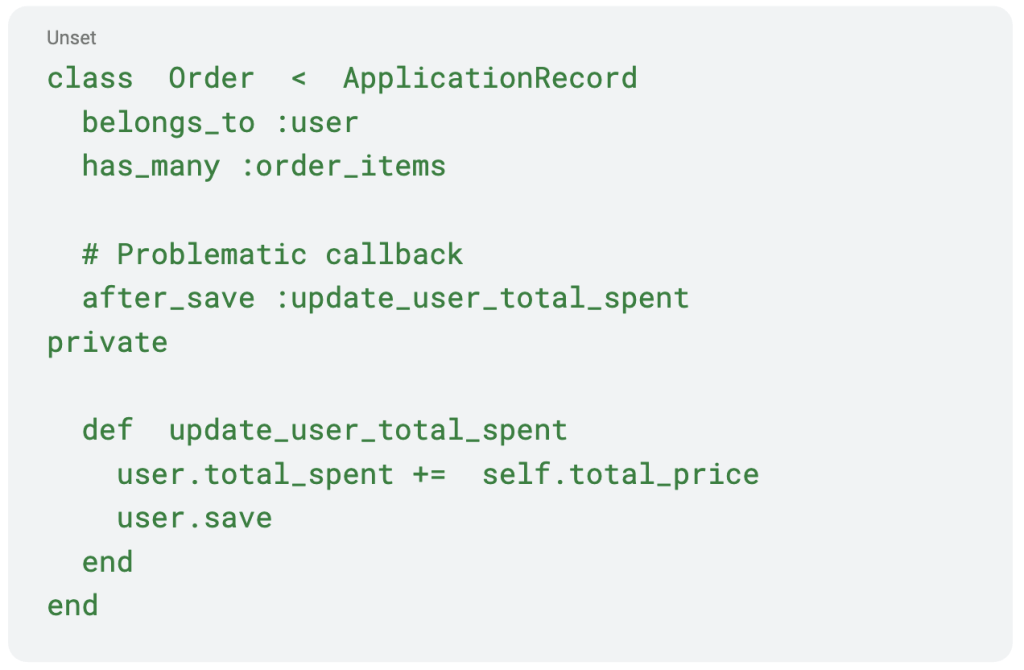

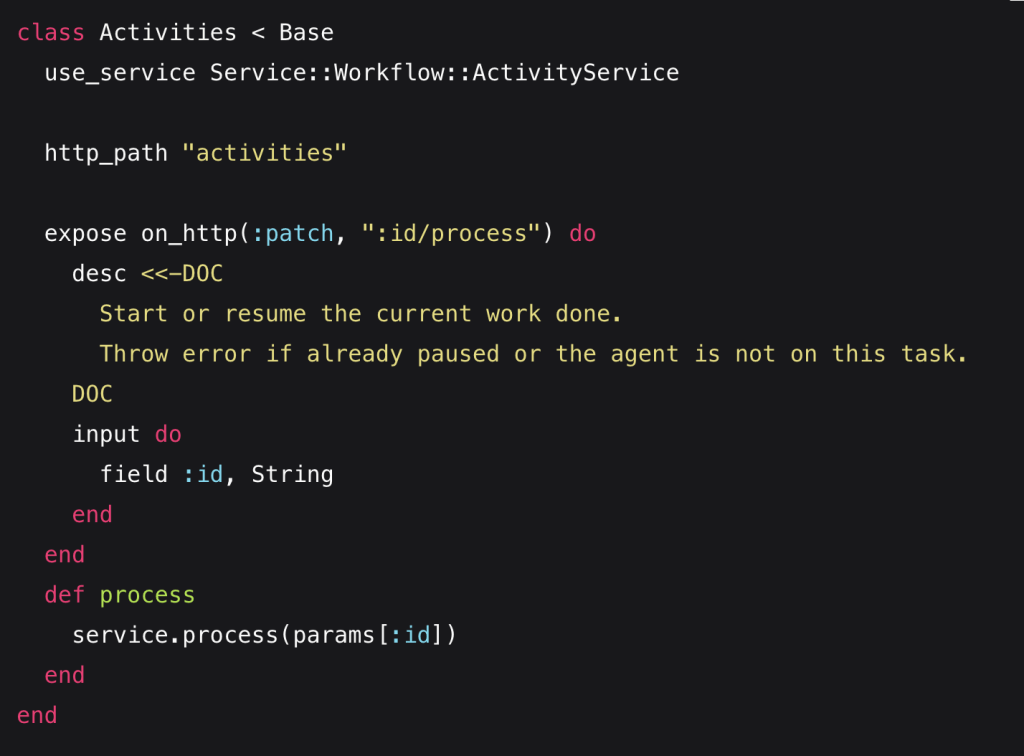

- Exposition: This layer handles the outward-facing side of the system, such as reacting to HTTP requests or other events. It’s the entry point where an external system or a user interacts with the backend, effectively exposing the system’s functionality to the world. The Exposition layer offers hooks, which let you plug code into events happening in the world. For example, a hook can run when a given HTTP route is called or when a given event is published.

This structure replaces the traditional MVC controller.

In a typical MVC controller, input handling and business logic are mixed together: the controller might interact directly with models, apply business rules, and render views. In ESE, the Exposition layer only manages the request and delegates all logic to the Service layer. This yields a cleaner separation of concerns and makes the system more modular and easier to maintain.
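To make the contrast with an MVC controller concrete, here is a minimal plain-Ruby sketch of the three layers handling a "create user" request. It does not use Verse. The class names (`UsersExposition`, `UserService`, `UserEffect`) and the hash-based request and response shapes are hypothetical. They were chosen only to show the Exposition layer parsing input and rendering output while every decision lives in the Service layer.

```ruby
# Hypothetical sketch in plain Ruby; these classes are not part of Verse.

# Effect: performs the side effect (an in-memory array stands in for a database).
class UserEffect
  def initialize
    @rows = []
  end

  def create(name:, email:)
    row = { id: @rows.size + 1, name: name, email: email }
    @rows << row
    row
  end
end

# Service: applies business rules and decides what to do; no I/O of its own.
class UserService
  InvalidEmail = Class.new(StandardError)

  def initialize(effect)
    @effect = effect
  end

  def create(name:, email:)
    raise InvalidEmail, "invalid email: #{email}" unless email.include?("@")

    @effect.create(name: name.strip, email: email.downcase)
  end
end

# Exposition: parses the incoming request and renders the response, nothing else.
class UsersExposition
  def initialize(service)
    @service = service
  end

  def post_users(params)
    user = @service.create(name: params.fetch("name"), email: params.fetch("email"))
    { status: 201, body: user }
  rescue UserService::InvalidEmail => e
    { status: 422, body: { error: e.message } }
  end
end

exposition = UsersExposition.new(UserService.new(UserEffect.new))
p exposition.post_users("name" => " Ada ", "email" => "Ada@Example.com")
p exposition.post_users("name" => "Bob", "email" => "not-an-email")
```

The email rule lives in `UserService`, not in `UsersExposition`. Adding a second entry point later therefore means writing another thin handler, not copying the validation.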

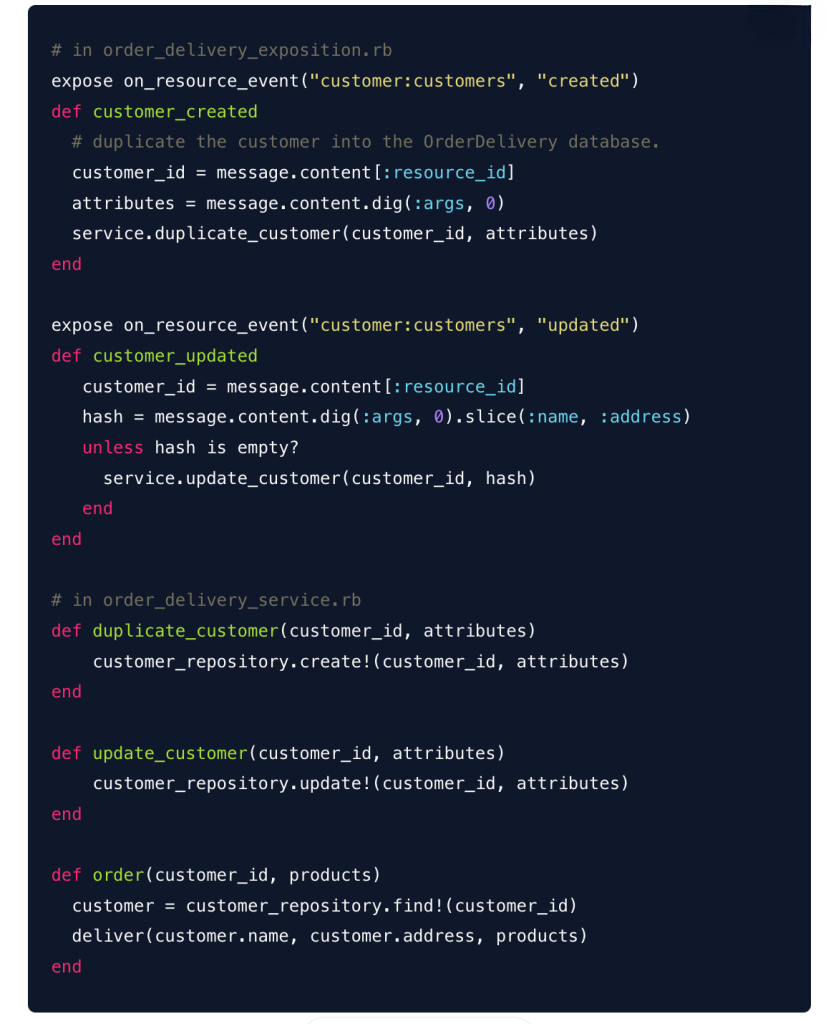

Another key feature is that the Exposition layer can handle multiple event sources and protocols.

From a development perspective, I was never satisfied with having to handle different input sources in different ways. The Exposition layer streamlines this by centralizing all inputs and dispatching them to the Service layer, whether the input is an HTTP request, an event, a background job, or a cron task.
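A minimal sketch of that centralization in plain Ruby follows. It uses a hypothetical `Exposition` registry, and Verse’s actual hook API may look quite different. An HTTP route, an event subscription, and a cron schedule are all registered as hooks, and each one only translates its own input format before calling the same service method.

```ruby
# Hypothetical sketch: one registry for several kinds of triggers (not the Verse API).
class Exposition
  def initialize
    @hooks = {}
  end

  # Register a handler for a (kind, key) pair, e.g. (:http, "POST /reports").
  def on(kind, key, &handler)
    @hooks[[kind, key]] = handler
  end

  # Called by whichever adapter received the input: web server, event consumer, or scheduler.
  def dispatch(kind, key, payload)
    handler = @hooks.fetch([kind, key]) { raise ArgumentError, "no hook for #{kind} #{key}" }
    handler.call(payload)
  end
end

# Service: the single place that knows how a report is produced.
class ReportService
  def generate(account_id:, trigger:)
    "report for account #{account_id} (triggered by #{trigger})"
  end
end

service = ReportService.new
exposition = Exposition.new

# Each hook only translates its own input format into the same service call.
exposition.on(:http, "POST /reports") do |params|
  service.generate(account_id: Integer(params.fetch("account_id")), trigger: :http)
end

exposition.on(:event, "accounts:closed") do |event|
  service.generate(account_id: event.fetch(:account_id), trigger: :event)
end

exposition.on(:cron, "0 2 * * *") do |job|
  service.generate(account_id: job.fetch(:account_id), trigger: :cron)
end

puts exposition.dispatch(:http, "POST /reports", { "account_id" => "7" })
puts exposition.dispatch(:event, "accounts:closed", { account_id: 7 })
puts exposition.dispatch(:cron, "0 2 * * *", { account_id: 7 })
```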

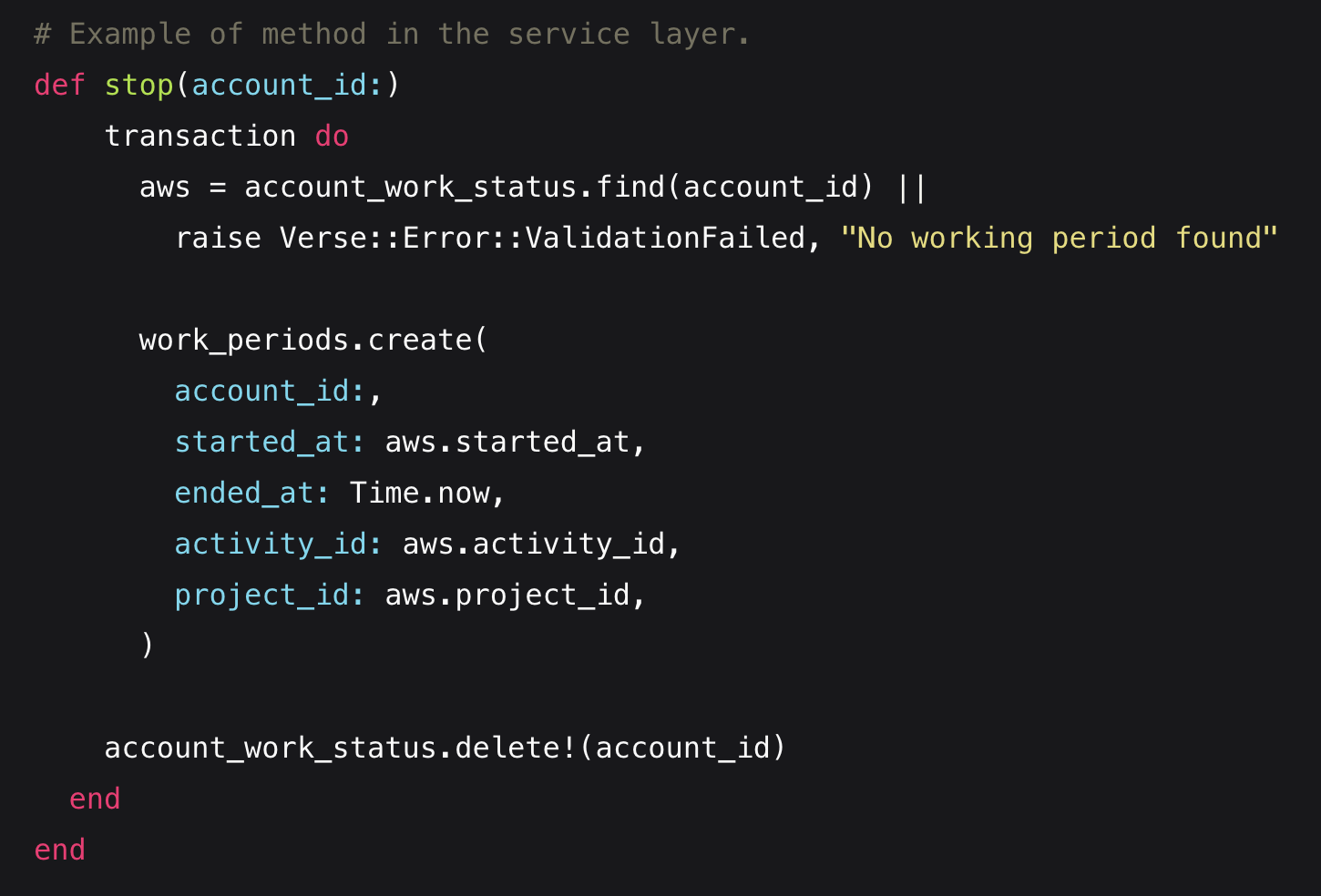

- Service: The Service layer is where you design your service objects, set up in-memory structures, and define error types, constants, conditions, and business rules. It is the brain of the system, processing input from the Exposition layer and applying the core business logic. It’s where decisions are made.

In Pulse and Verse, we provide a base Service class (Verse::Service::Base) that handles the authorization context (similar to current_user in Rails) and passes it to the Effect layer, which is in charge of dealing with access rights. But that’s a topic for another article on our approach to authorization management.
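The snippet below sketches that hand-off in plain Ruby. It is not the actual `Verse::Service::Base` implementation. A hypothetical `BaseService` stores the caller’s authorization context, and every Effect it builds receives that context, so the access check happens where the data is touched. The `AuthContext` struct, the `:admin` role, and the invoice classes are all assumptions made for illustration.

```ruby
# Hypothetical sketch; the real Verse::Service::Base API may look different.
AccessDenied = Class.new(StandardError)

# Stand-in for the authorization context (roughly what current_user gives you in Rails).
AuthContext = Struct.new(:user_id, :roles) do
  def can?(role)
    roles.include?(role)
  end
end

# Effect: checks access rights right where the data is touched.
class InvoiceEffect
  def initialize(auth_context, store)
    @auth = auth_context
    @store = store
  end

  def delete(invoice_id)
    raise AccessDenied, "user #{@auth.user_id} cannot delete invoices" unless @auth.can?(:admin)

    @store.delete(invoice_id)
  end
end

# Base service: keeps the authorization context and hands it to every Effect it builds.
class BaseService
  attr_reader :auth_context

  def initialize(auth_context)
    @auth_context = auth_context
  end

  private

  def effect(effect_class, *args)
    effect_class.new(auth_context, *args)
  end
end

class InvoiceService < BaseService
  def initialize(auth_context, store)
    super(auth_context)
    @store = store
  end

  # The service decides what should happen; the Effect decides whether this caller may do it.
  def delete(invoice_id)
    effect(InvoiceEffect, @store).delete(invoice_id)
  end
end

store = { 1 => "Invoice #1", 2 => "Invoice #2" }
admin = AuthContext.new(1, [:admin])
guest = AuthContext.new(2, [])

puts InvoiceService.new(admin, store).delete(1)

begin
  InvoiceService.new(guest, store).delete(2)
rescue AccessDenied => e
  puts e.message
end
```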

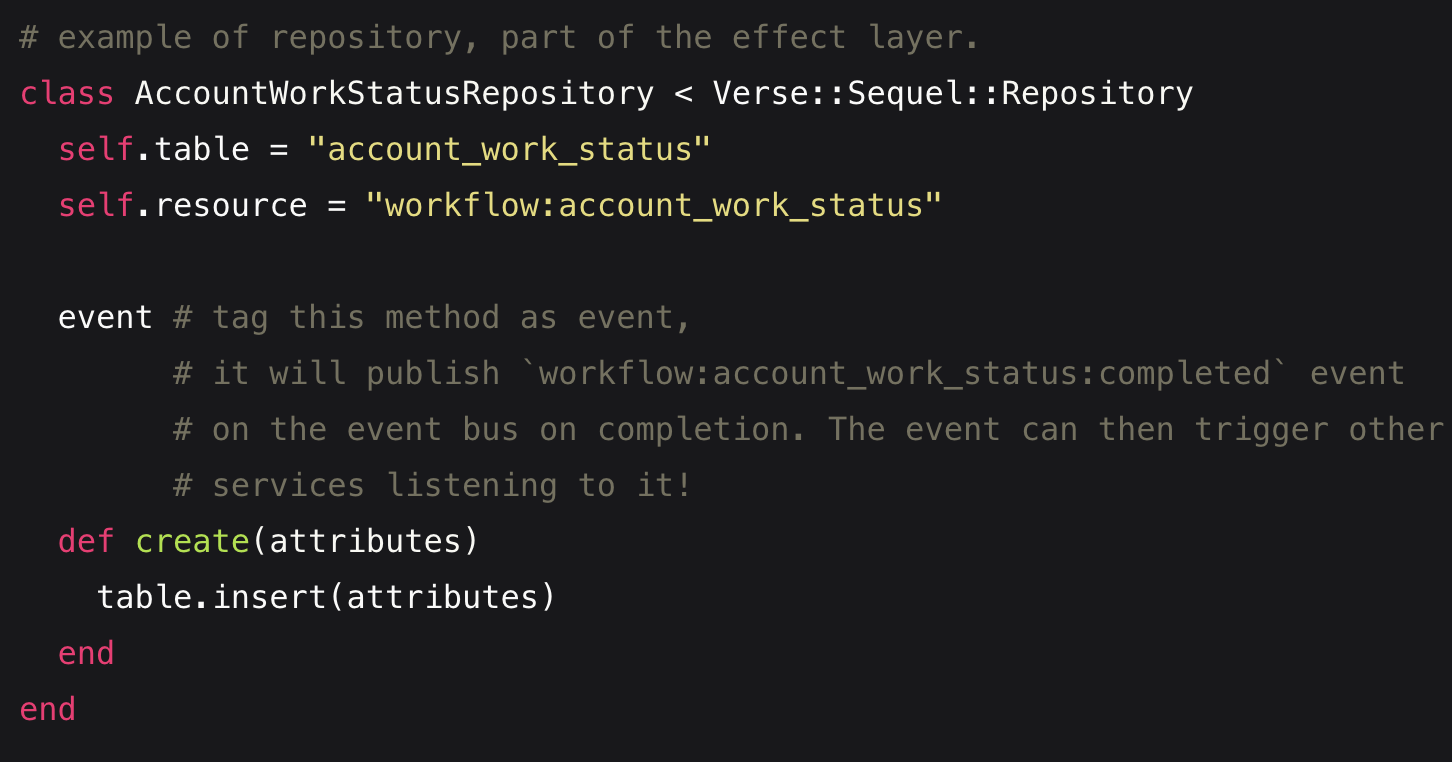

- Effect: Called by the Service layer, the Effect layer takes over to perform side effects. This might involve reading from or writing to a database, calling an API endpoint, publishing events, sending notifications, or any other action resulting from the service’s operations. Here’s the twist: every successful write operation must publish an event to the Event Bus. So, when a new record is created, a records:created event is emitted automatically, thanks to Verse’s built-in functionality.
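Verse emits these events for you. The sketch below only illustrates the rule in plain Ruby. A hypothetical in-memory `EventBus` stands in for the real Event Bus, and a hypothetical `RecordEffect` publishes `records:created` or `records:updated` only after the corresponding write has gone through. The Effect itself contains no business conditions.

```ruby
# Hypothetical sketch: an in-memory stand-in for the Event Bus.
class EventBus
  attr_reader :published

  def initialize
    @published = []
  end

  def publish(channel, payload)
    @published << [channel, payload]
  end
end

# Effect: performs the write, then announces it. No business conditions here.
class RecordEffect
  def initialize(bus, resource: "records")
    @bus = bus
    @resource = resource
    @rows = {}
    @next_id = 1
  end

  def create(attributes)
    row = attributes.merge(id: @next_id)
    @rows[@next_id] = row # the write itself
    @next_id += 1
    @bus.publish("#{@resource}:created", row) # only reached if the write did not raise
    row
  end

  def update(id, attributes)
    row = @rows.fetch(id).merge(attributes) # raises KeyError (so no event) if the record is missing
    @rows[id] = row
    @bus.publish("#{@resource}:updated", row)
    row
  end
end

bus = EventBus.new
effect = RecordEffect.new(bus)
effect.create(title: "Hello")
effect.update(1, title: "Hello, world")
bus.published.each { |channel, payload| puts "#{channel} #{payload}" }
```

Publishing after the write means a failed write never produces an event. A production Event Bus must also handle the opposite case, where the write succeeds but publishing fails, which this sketch ignores.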

ESE vs. MVC: Key Differences

You might be wondering how ESE compares to the more traditional MVC (Model-View-Controller) architecture. While both aim to separate concerns, they approach it differently.

In MVC:

- The Model manages data and business logic, often combining the two. It hides underlying complexity, such as how data is queried, which can lead to performance or security issues.

- The View handles the presentation layer, directly interacting with the Model to display data.

- The Controller acts as a mediator, processing user input and updating the View.

In contrast, ESE is more modular:

- The Exposition layer handles all interactions with the outside world, acting as the system’s senses. It is also where output is rendered, for example the response to an HTTP request.

- The Service layer focuses purely on business logic and is separated from data concerns.

- The Effect layer manages the tangible outcomes and communicates these changes to the system, akin to how muscles execute actions.

This separation leads to cleaner, more maintainable code and makes it easier to scale complex systems.

A major pain point in MVC is having models handle both logic and effects, especially in teams with mixed experience levels. For instance, mixing query building with business code is bad practice. When a query is assembled incrementally inside the business logic, it becomes hard to predict how it will perform.

Another significant advantage of ESE is testing. You can easily replace the Effect layer with a mock to check that your logic holds up. The result is tests that run in microseconds rather than seconds, with no dependency on other systems. This is especially valuable in large applications, where the difference can add up to as much as 45 minutes of productivity saved per day. And yes, that number comes from my own experience.
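A minimal sketch of such a test follows, assuming a hypothetical `TransferService` whose Effect is injected through its constructor. The test swaps in an in-memory fake, so no database or network is involved. The checks use plain `raise` to stay dependency-free. In a real project, Minitest or RSpec would normally host them.

```ruby
# Hypothetical sketch: the Effect is injected, so tests can swap it for a fake.
class TransferService
  InsufficientFunds = Class.new(StandardError)

  def initialize(accounts_effect)
    @accounts = accounts_effect
  end

  # Business rule: a transfer must be positive and must not overdraw the source account.
  def transfer(from:, to:, amount:)
    raise ArgumentError, "amount must be positive" unless amount.positive?

    balance = @accounts.balance(from)
    raise InsufficientFunds, "balance #{balance} < #{amount}" if balance < amount

    @accounts.move(from: from, to: to, amount: amount)
  end
end

# Fake Effect: same methods as the real one, but backed by a Hash and recording calls.
class FakeAccountsEffect
  attr_reader :moves

  def initialize(balances)
    @balances = balances
    @moves = []
  end

  def balance(account_id)
    @balances.fetch(account_id)
  end

  def move(from:, to:, amount:)
    @moves << [from, to, amount]
    :ok
  end
end

fake = FakeAccountsEffect.new({ "alice" => 100, "bob" => 0 })
service = TransferService.new(fake)

raise "transfer should succeed" unless service.transfer(from: "alice", to: "bob", amount: 60) == :ok
raise "move should be recorded" unless fake.moves == [["alice", "bob", 60]]

begin
  service.transfer(from: "bob", to: "alice", amount: 10)
  raise "expected InsufficientFunds"
rescue TransferService::InsufficientFunds
  # Expected: the rule was enforced entirely in memory.
end

puts "all transfer checks passed"
```

The fake only records calls and never changes balances. The test therefore exercises the Service layer’s decisions in isolation, while the real Effect would be covered separately by slower integration tests.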

In Summary

We opted for ESE over MVC because it offers a more modern and modular approach to handling complex backend systems. By separating business logic from data concerns and outcomes, ESE allows us to build systems that are easier to manage, test, and scale.

Stay Tuned

In the next article, we’ll dive into why we chose Redis Streams over NATS, RabbitMQ, or Kafka to power our Event Bus. Stay tuned!