The ESG Backlash

Why Ground Truth in Data is Essential for Societal Trust

The recent challenges facing ESG (Environmental, Social, and Governance) are not sudden shocks but the inevitable outcome of a foundation built more on elite consensus than broad societal trust. ESG’s rapid expansion masked a dangerous fragility—one that confused compliance with genuine commitment and mistook policy momentum for true legitimacy.

At the heart of the problem lies a failure to connect ESG goals with real-world benefits. Instead of translating sustainability into tangible impacts such as job creation, community development, and economic well-being, the conversation became lost in complex taxonomies, disclosure frameworks, and net-zero targets. This disconnect created a vacuum that critics filled by portraying ESG as an out-of-touch, elitist agenda.

This is not merely a public relations issue. Sustainability is inherently political, involving difficult trade-offs and resource decisions. Ignoring this reality left ESG vulnerable to backlash.

Where does data quality fit into rebuilding trust in ESG?

The path forward requires viewing ESG as a systemic and strategic political economy project that builds broad coalitions. But these coalitions must rest on an unshakeable foundation of truth—truth found in reliable data.

For too long, ESG data has suffered from inconsistencies, estimates, and self-reported disclosures that lack rigorous validation. This “wild west” of data has fueled skepticism and weakened trust. If the data itself is unreliable, societal buy-in becomes impossible.

Ingedata’s Expertise and Perspective on ESG Data Quality

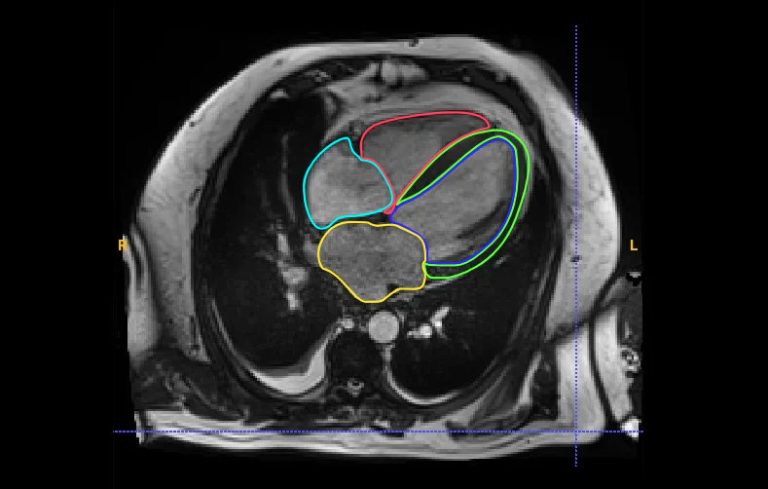

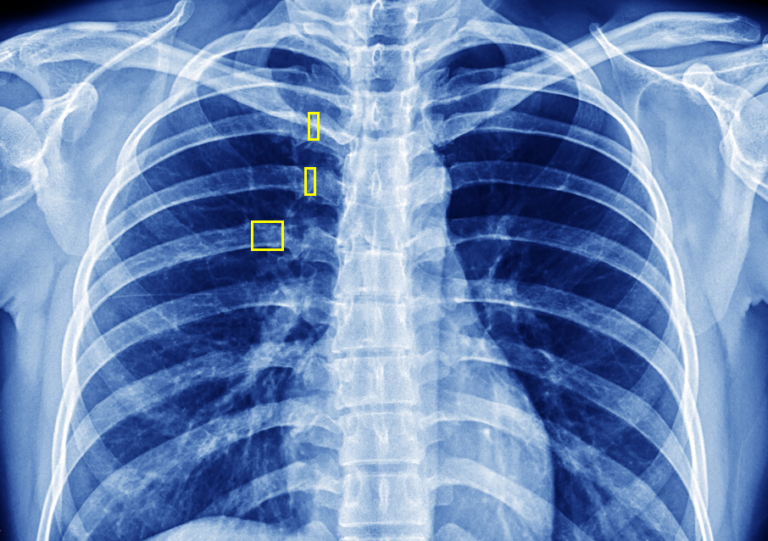

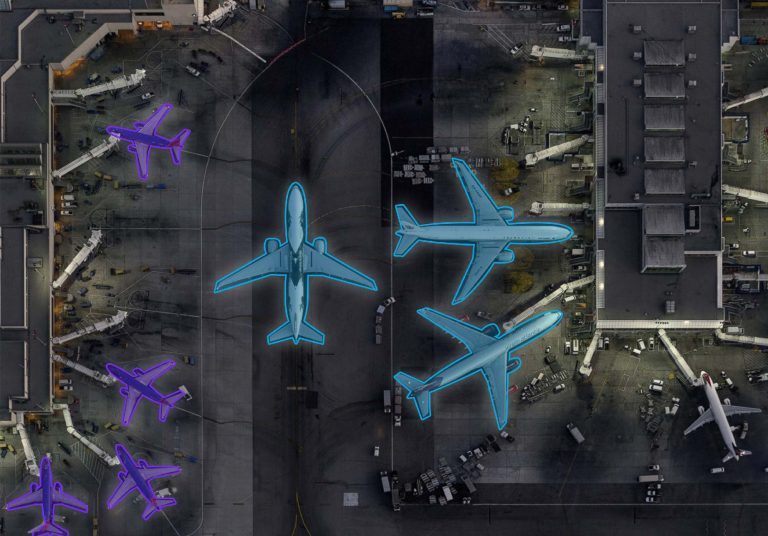

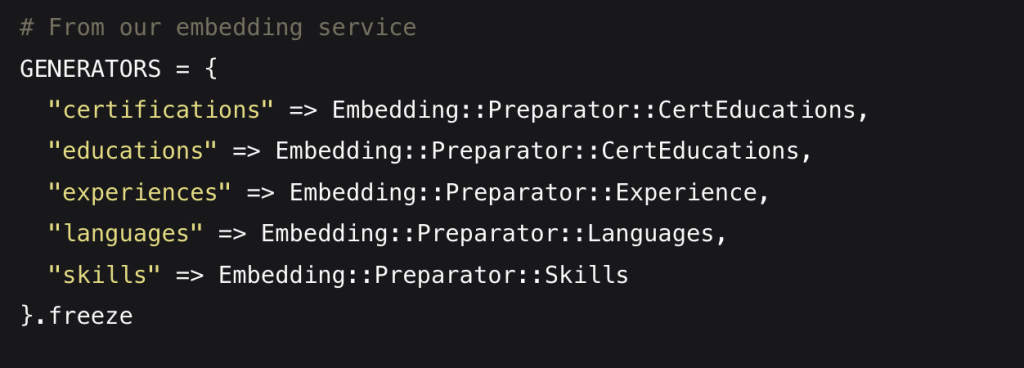

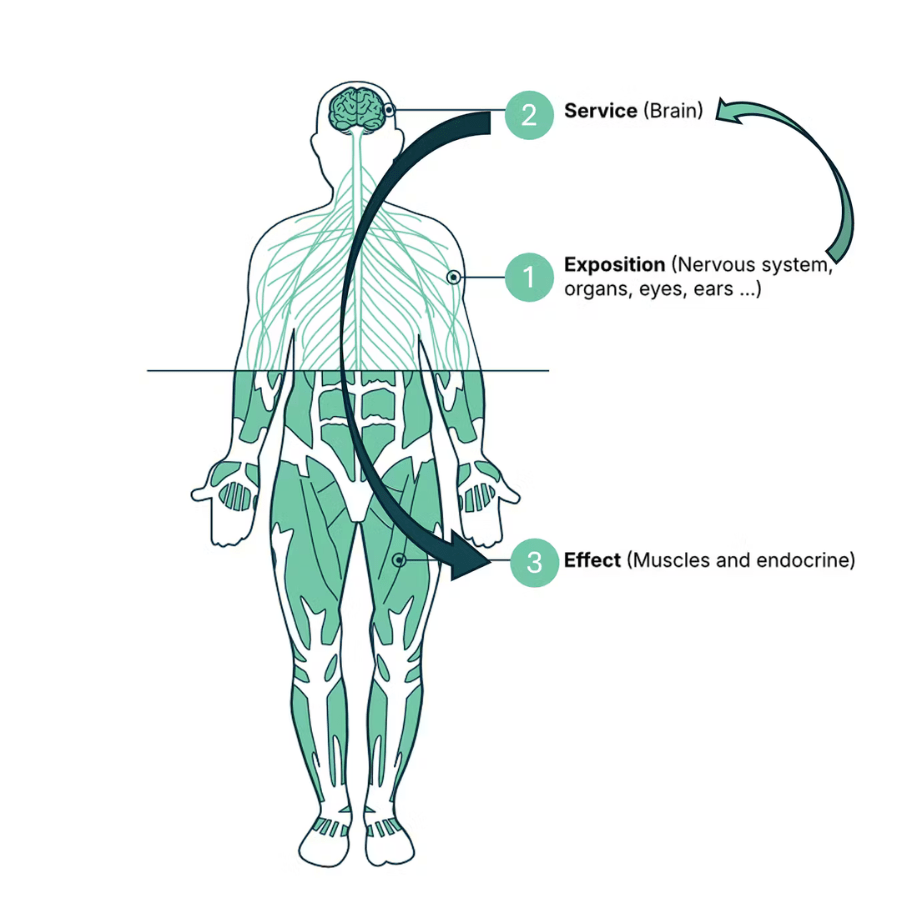

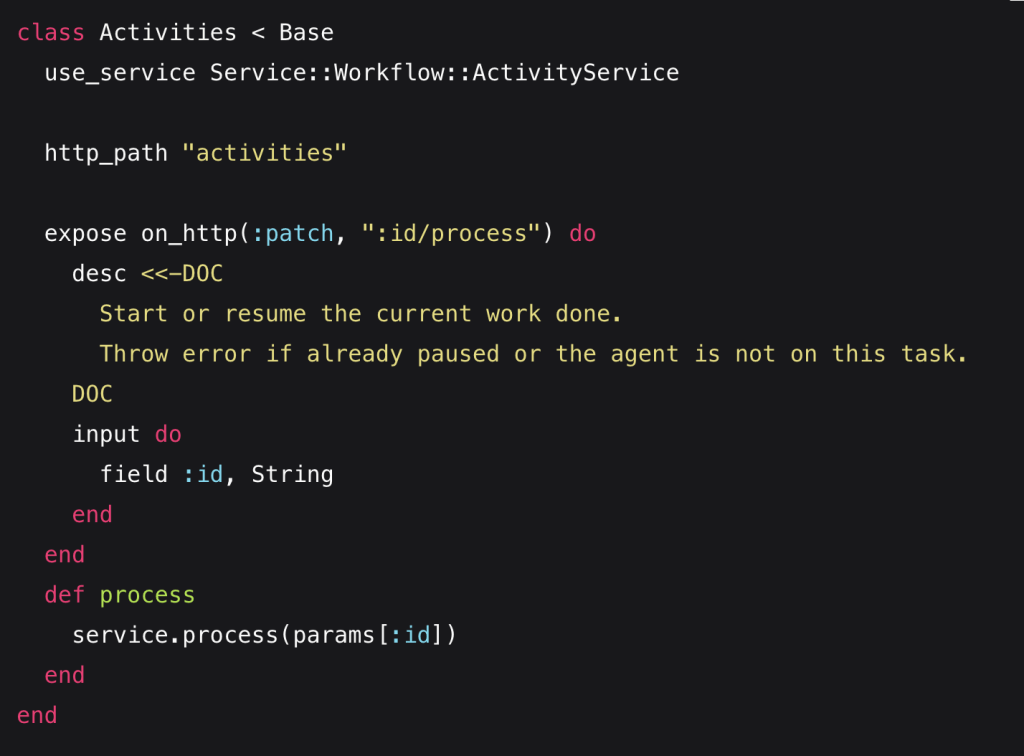

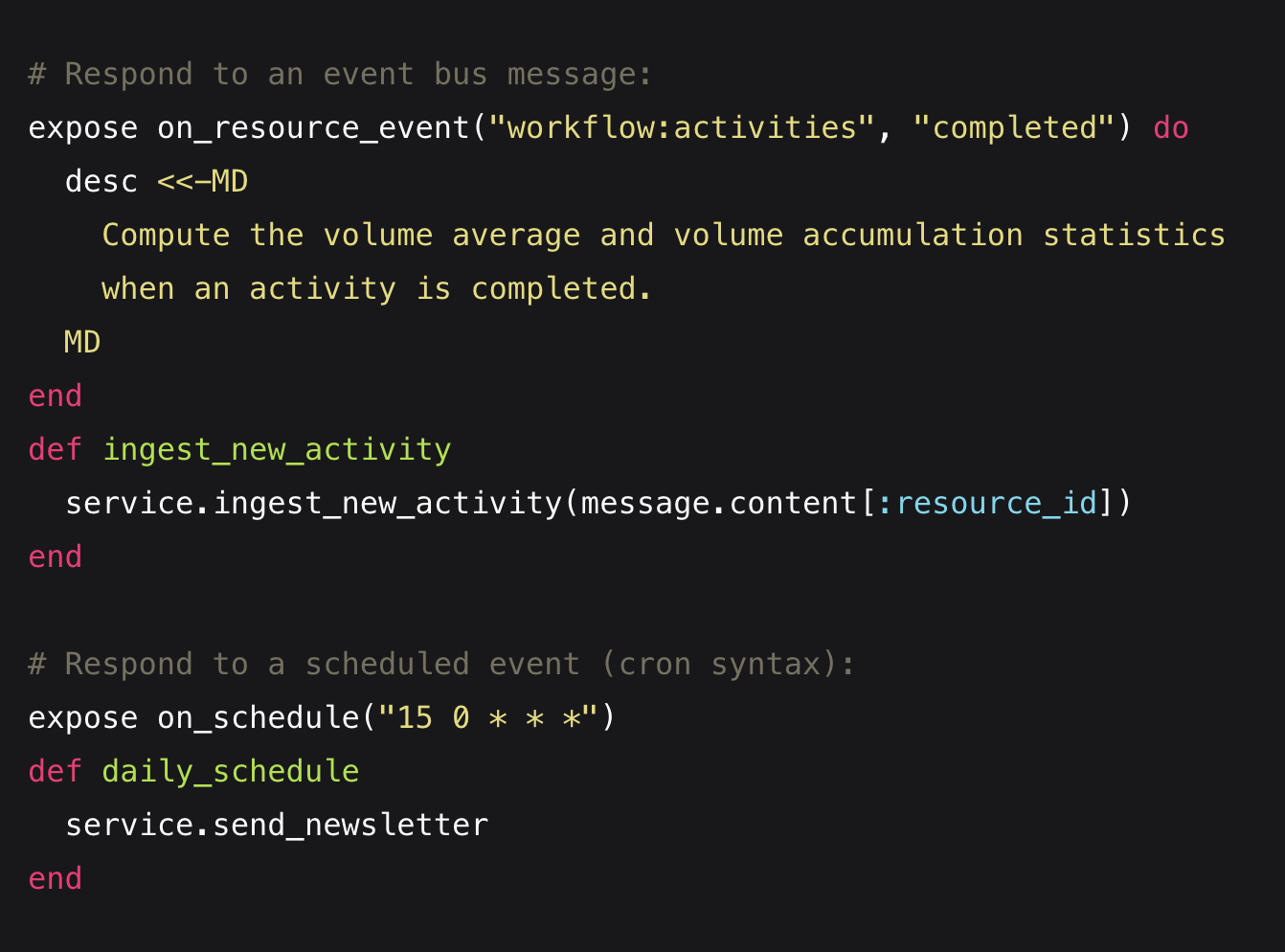

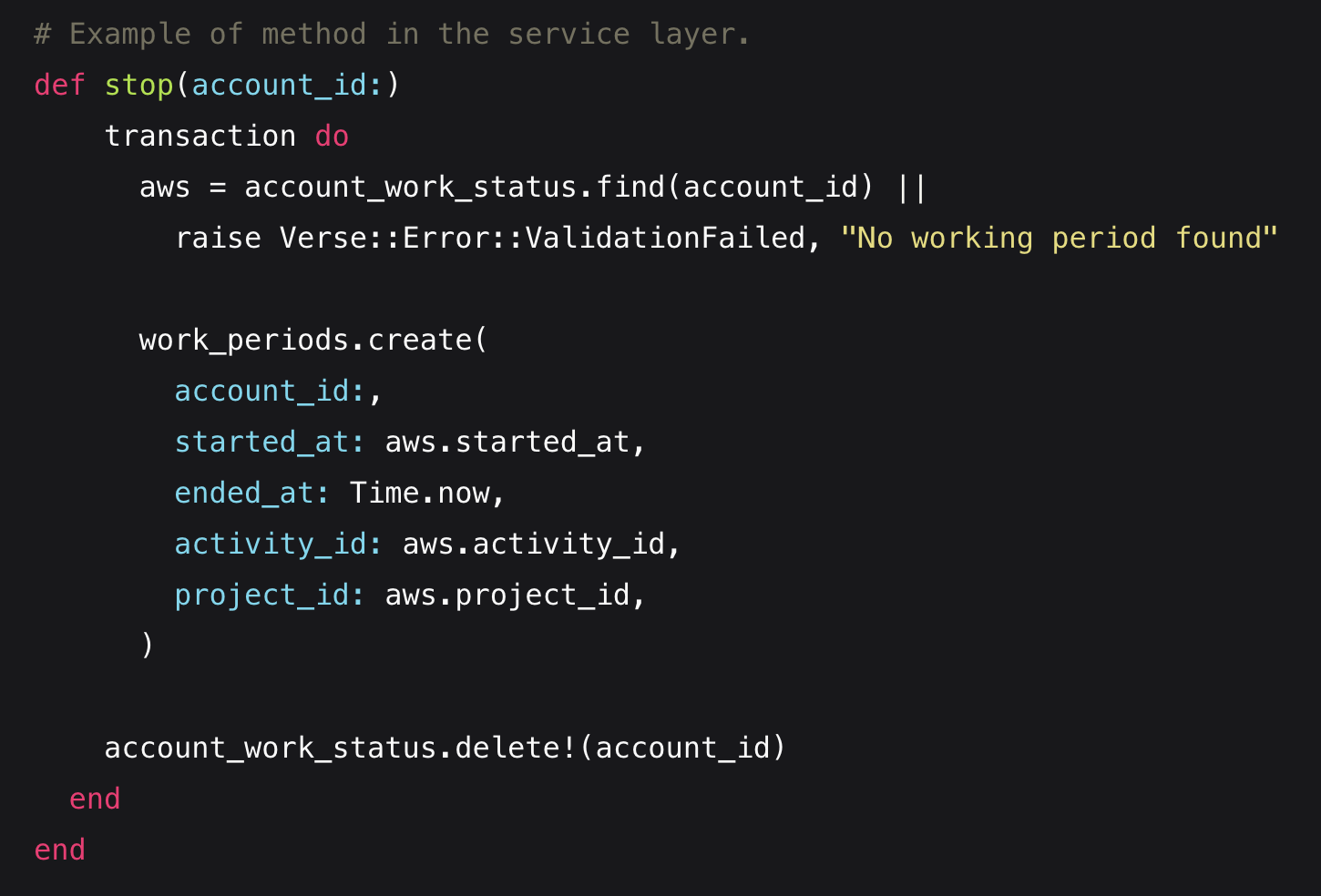

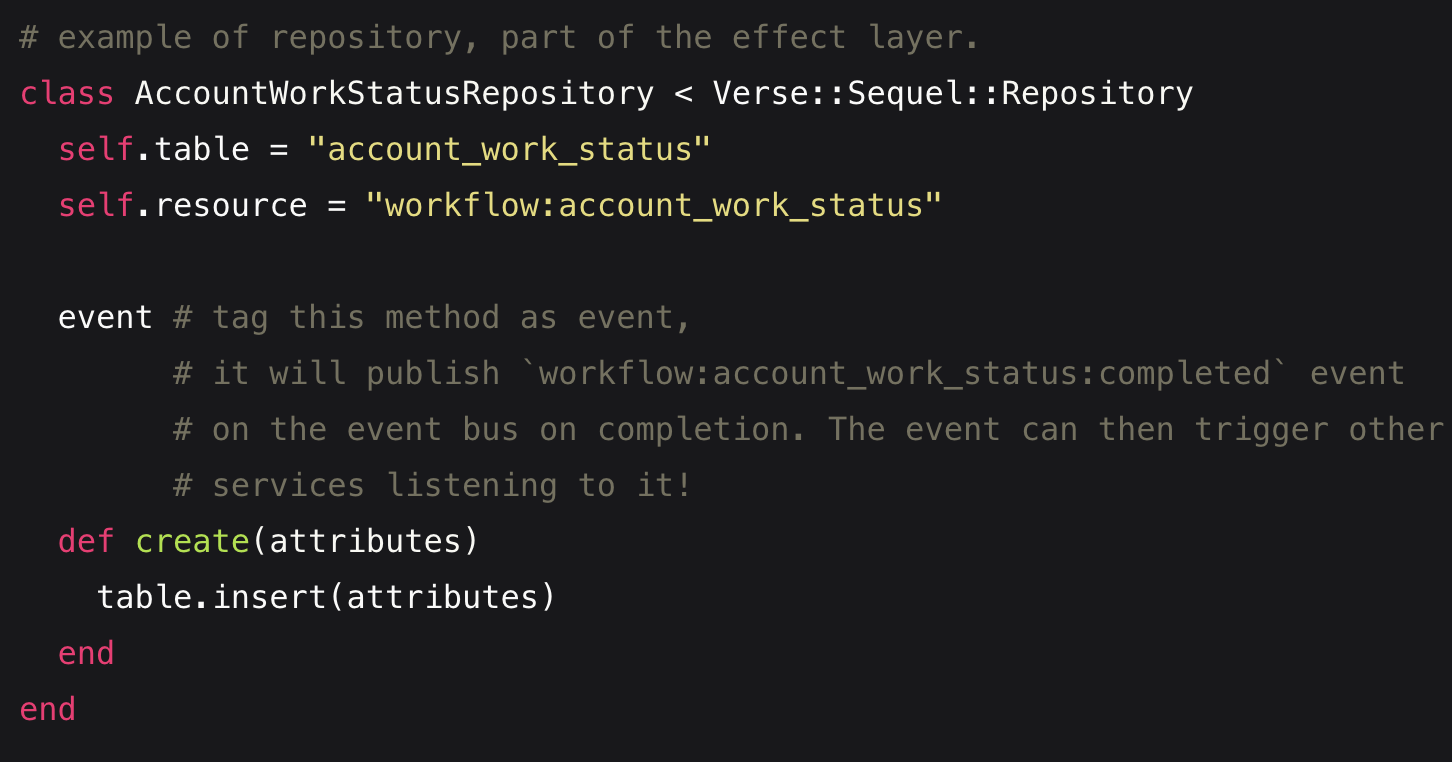

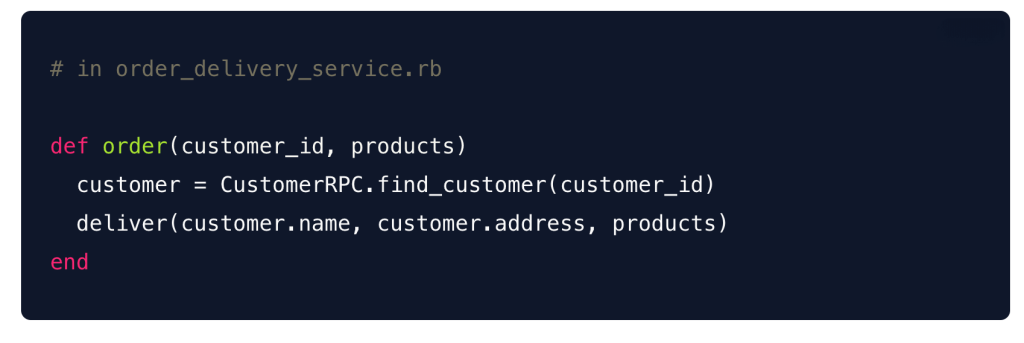

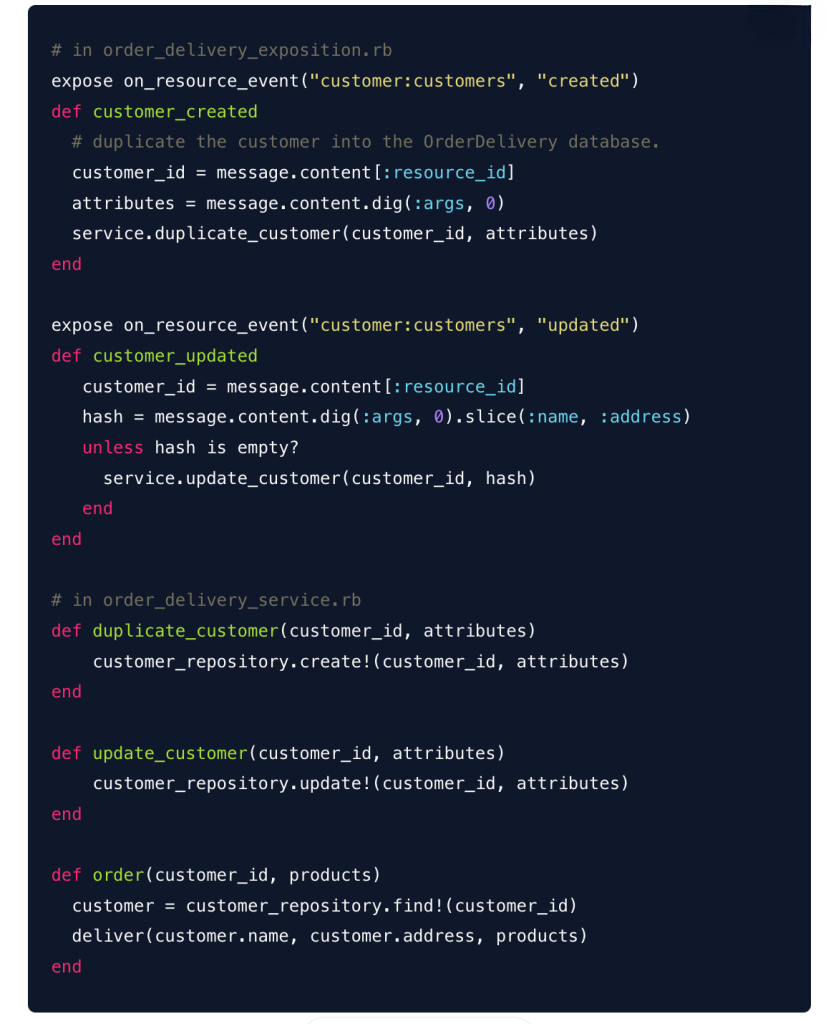

At Ingedata, we understand that trustworthy ESG strategies start with reliable, high-quality data. Our expertise lies in combining advanced AI technologies with expert human validation through our human-in-the-loop approach. This ensures data accuracy and context that automated systems alone cannot achieve.
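To make the human-in-the-loop idea concrete, here is a minimal sketch of one common pattern: model outputs with high confidence are accepted automatically, and the rest are queued for expert review. This is an illustration under stated assumptions, not a description of Ingedata's actual pipeline. The names `Observation`, `route`, and `apply_reviews`, and the 0.9 threshold, are all hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Observation:
    """One labeled data point (hypothetical structure for illustration)."""
    item_id: str
    label: str
    confidence: float      # model's self-reported score in [0, 1]
    source: str = "model"  # who produced the current label


def route(observations, threshold=0.9):
    """Split observations into auto-accepted and human-review queues.

    The threshold is an assumed value; in practice it would be tuned
    against a validated sample.
    """
    accepted, review = [], []
    for obs in observations:
        (accepted if obs.confidence >= threshold else review).append(obs)
    return accepted, review


def apply_reviews(review_queue, corrections):
    """Apply reviewer decisions to the queued observations.

    `corrections` maps item_id -> label chosen by the human reviewer.
    Items without an entry keep the model's label but are still marked
    as human-validated, since a reviewer looked at them.
    """
    return [
        replace(obs, label=corrections.get(obs.item_id, obs.label), source="human")
        for obs in review_queue
    ]


predictions = [
    Observation("tile-001", "forest", 0.97),
    Observation("tile-002", "cleared", 0.55),
]
accepted, review_queue = route(predictions)
validated = apply_reviews(review_queue, {"tile-002": "forest"})
```

In this sketch, `tile-001` is accepted as-is, while `tile-002` goes to a reviewer, who corrects its label. Recording `source` alongside each label keeps an audit trail of which values were checked by a person.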

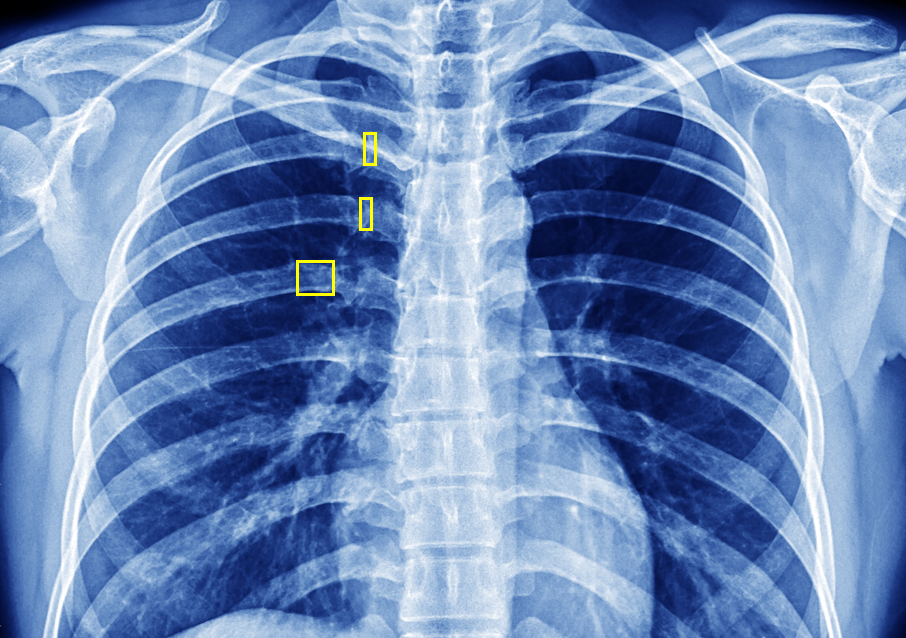

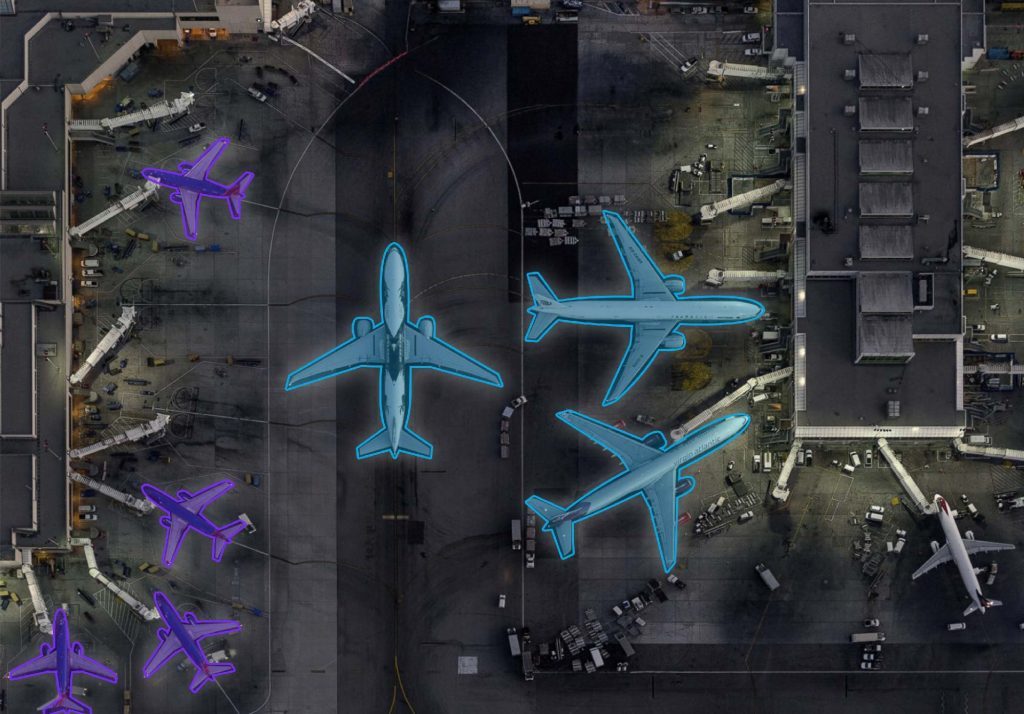

We have consistently delivered exceptional precision—achieving 99.81% accuracy on over 11 million validated observables across diverse sectors such as Earth observation, industrial inspection, and financial data processing. This experience demonstrates our ability to provide scalable, consistent data solutions critical to ESG initiatives.

Our perspective is that ESG efforts must be rooted in transparent and verifiable data to build genuine societal trust. We believe ESG is more than compliance; it’s a collaborative project requiring broad coalitions built on truth. By ensuring that the underlying data reflects real-world impacts—such as environmental changes, community development, and economic benefits—we enable organizations to craft credible narratives and support effective policymaking.

At Ingedata, we see ourselves as partners in the journey toward sustainable progress, committed to delivering the highest-quality data annotation and validation services. We firmly believe that in data lies truth, and that getting the data right is essential to transforming ESG into a movement with lasting societal impact.

Why this matters for ESG

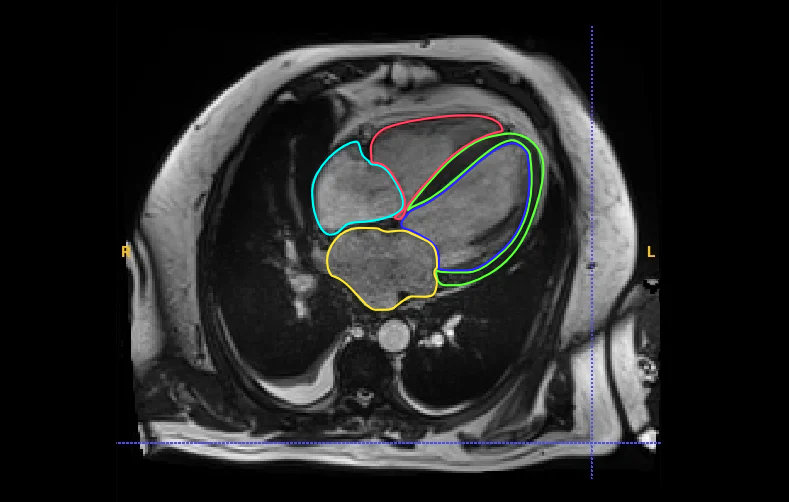

- Credibility and Transparency: To transform ESG from a compliance exercise into a legitimate project, underlying data must be unimpeachable. This requires human oversight to validate and classify impacts with precision, ensuring data reflects real conditions. Imagine bringing the accuracy of satellite image analysis to the verification of deforestation claims or renewable energy impacts.

- Authentic Narratives: Genuine ESG stories must be grounded in verifiable data. Claims about green jobs or community benefits require robust, validated socio-economic data, not broad statements. Our expert-refined data enables organizations to build credible, compelling narratives that resonate.

- Building Trustworthy Coalitions: Broad participation in ESG efforts depends on trust. Labor unions, civic groups, and citizens engage only when data is transparent, fair, and reflective of their concerns. Our commitment to data quality and transparency helps earn that trust.

- Effective Policymaking: Policymakers depend on reliable data to craft impactful ESG regulations. Flawed data leads to ineffective or counterproductive policies. The human-in-the-loop approach ensures complex nuances are captured, supporting stronger governance.

Though the ESG consensus has faced setbacks, the urgent need for sustainable progress remains. Rebuilding that consensus requires an unwavering commitment to truth, which means investing in the essential but often overlooked task of ensuring data quality. While AI can process vast amounts of data, human expertise remains vital for accuracy, context, and building trust.

At Ingedata, we see ourselves as gatekeepers of this future. We believe that “in data lies truth.” By delivering the highest-quality data annotation and validation services, we empower organizations to develop ESG strategies that are credible, compelling, and capable of securing genuine societal buy-in.

The future of ESG depends on humility, wider alliances, and relentless focus on legitimacy. And it all starts with getting the data right.

#ESG #DataQuality #Sustainability #SocietalTrust #HumanInTheLoop #SupervisedLearning #Ingedata