In software development, we’re often taught to follow the DRY principle (Don’t Repeat Yourself) religiously. The idea that data should exist in exactly one place is deeply ingrained in our programming culture. Yet, in real-world systems, particularly in microservice architectures, strict adherence to this principle can lead to complex dependencies, performance bottlenecks, and fragile systems.

This article explores why duplicating data across multiple domains, while seemingly counterintuitive, can actually be the right architectural choice in many scenarios.

Before we dive into the benefits of intentional data duplication, it’s worth noting that duplication already happens at virtually every level of computing—often without us even realizing it.

At the hardware level, modern CPUs contain multiple layers of cache (L1, L2, L3) that duplicate data from main memory to improve access speed. Your RAM itself is a duplication of data from persistent storage. Even within storage devices, technologies like RAID mirror data across multiple disks for redundancy.

When you’re running a program, the same piece of data might simultaneously exist in:

Each level trades consistency management for performance gains. This is duplication by design!

Operating systems maintain file system caches, page caches, and buffer caches—all forms of data duplication designed to improve performance. Virtual memory systems duplicate portions of RAM to disk when memory pressure increases.

Consider what happens when multiple applications open the same file:

That’s three copies of the same data, all serving different purposes.

Web browsers cache resources locally. Content Delivery Networks (CDNs) duplicate website assets across global edge locations. Database replicas maintain copies of the same data for read scaling and disaster recovery.

When you visit a website, the same image might exist:

Modern frameworks implement various caching strategies that duplicate data: HTTP response caching, object caching, computed value memoization, and more. Each represents a deliberate choice to trade consistency for performance.

The reality is that data duplication isn’t an anti-pattern—it’s a fundamental strategy employed throughout computing to balance competing concerns like performance, availability, and resilience.

In traditional monolithic applications, we strive for normalized database schemas where each piece of data exists in exactly one place. This approach works well when:

However, as systems grow and evolve toward distributed architectures, these assumptions break down:

A common pattern in microservice architectures is to use event-driven mechanisms to duplicate and synchronize data across service boundaries. Let’s examine a typical example:

Consider a system with two separate services:

Both services need access to person data like names and contact information. Rather than having the HR service constantly query the IAM service, each maintains its own copy of the data.

A typical implementation might use an event-driven approach:

This pattern can be implemented with a helper like this (pseudocode):

# Example of a DuplicateFieldHelper in a Ruby-based system

def duplicate_fields (source_event_type, target_repository, mapping)

# Subscribe to events from the source service

subscribe_to_event(source_event_type) do |event|

# Extract the ID of the changed record

record_id = event.resource_id

# Find the corresponding record in the local repository

local_record = target_repository.find_by_source_id(record_id)

# Only update fields that were changed in the event

changed_fields = event.changed_fields

fields_to_update = mapping.select { |source_field, _|

changed_fields.include?(source_field)

}

# Update the local copy with the new values

target_repository.update(

local_record.id,

fields_to_update.transform_values { |target_field|

event.data[target_field]

}

)

end

end

# Usage example

duplicate_fields

“users.updated” ,

EmployeeRepository

{

first_name: :first_name,

last_name: :last_name,

email: :contact_email,

profile_picture: :photo_url

}

)

This pattern allows services to maintain local copies of data while ensuring they eventually reflect changes made in the authoritative source.

By duplicating data, each service can operate independently without runtime dependencies on other services. If the IAM service is temporarily unavailable, the HR service can still function with its local copy of employee data.

Local data access is always faster than remote calls. By keeping a copy of frequently accessed data within each service, we eliminate network round-trips and reduce latency.

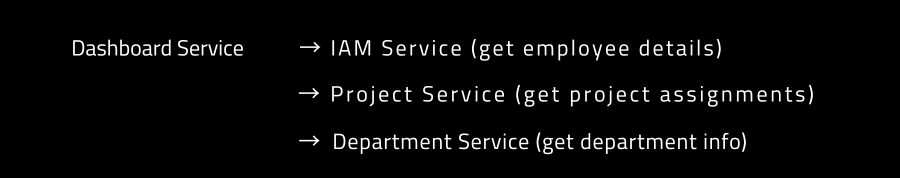

Consider a dashboard that displays employee information and their current projects. Without data duplication, rendering this dashboard might require:

With data duplication, it becomes:

The performance difference can be dramatic, especially at scale.

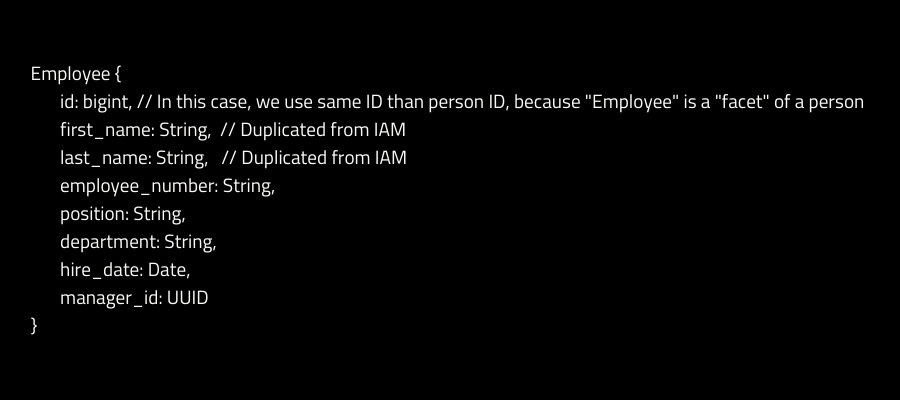

Each service can model data according to its specific domain needs. The HR service can add employment-specific fields without affecting the IAM service’s data model.

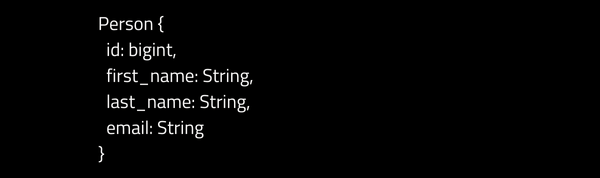

For example, the IAM service might store basic name information:

While the HR service might enhance this with employment details:

If one service fails, others can continue operating with their local data. This creates a more robust system overall, as temporary outages in one service don’t cascade throughout the entire system.

If one service fails, others can continue operating with their local data. This creates a more robust system overall, as temporary outages in one service don’t cascade throughout the entire system.

Services can scale independently based on their specific load patterns, without being constrained by dependencies on other services.

A key principle in successful data duplication is establishing clear ownership. A common approach is the “master-duplicate” pattern:

This pattern provides a structured approach to data duplication that maintains data integrity while gaining the benefits of duplication.

The DRY principle remains valuable, but like all principles, it should be applied with nuance. Consider these perspectives:

“DRY is about knowledge duplication, not code duplication.” — Dave Thomas, co-author of The Pragmatic Programmer

“Duplication is far cheaper than the wrong abstraction.” — Sandi Metz

In microservice architectures, some level of data duplication is not just acceptable but often necessary. The key is to duplicate deliberately, with clear ownership and synchronization mechanisms.

Remember that DRY was conceived in an era dominated by monolithic applications. In distributed systems, strict adherence to DRY across service boundaries often leads to tight coupling—precisely what microservices aim to avoid.

Data duplication makes sense when:

The DRY principle remains valuable, but like all principles, it should be applied with nuance. Consider these perspectives:

Challenge: Keeping duplicated data in sync can be complex.

Mitigation: Use event-driven architectures with clear ownership models. Implement idempotent update handlers and reconciliation processes.

Challenge: Duplicating data increases storage needs (but it is often a no-problem).

Mitigation: Be selective about what data you duplicate. Often, only a subset of fields needs duplication.

Challenge: Developers need to understand which service owns which data.

Mitigation: Clear documentation and conventions. Use tooling to visualize data ownership and flow.

Challenge: Applications must handle the reality that data might be temporarily stale. Events can be dropped, code can fail to update, etc. It needs often to be handled through maintenance task sets or use of ACLs.

Mitigation: Design UIs and APIs to gracefully handle eventual consistency. Consider showing “last updated” timestamps where appropriate. Write maintenance scripts that flag and correct data discrepancies once in a while.

Consider an e-commerce platform with these services:

The Order Service needs product information to display order details. Options include:

Option 3 is often the best compromise. When a product is updated in the Product Catalog Service, an event is published, and the Order Service updates its local copy of the relevant fields.

Data duplication across multiple domains may feel like breaking the rules, but it’s often a pragmatic solution to real-world challenges in distributed systems. By understanding when and how to duplicate data effectively, we can build systems that are more resilient, performant, and maintainable.

The next time someone invokes the DRY principle to argue against data duplication, remember that even your CPU is constantly duplicating data to work efficiently. Sometimes, the right architectural choice is to embrace controlled duplication rather than fight against it.

As with most engineering decisions, the answer isn’t about following rules dogmatically—it’s about understanding tradeoffs and choosing the approach that best serves your specific requirements.

This article is part of our series on API design and microservice architecture. Stay tuned for more insights into how we’ve built a scalable, maintainable system.

Proudly awarded as an official contributor to the reforestation project in Madagascar

(Bôndy - 2024)